AI bias isn't going away anytime soon

Another day, another example of artificial intelligence judging people unfairly based on their appearance.

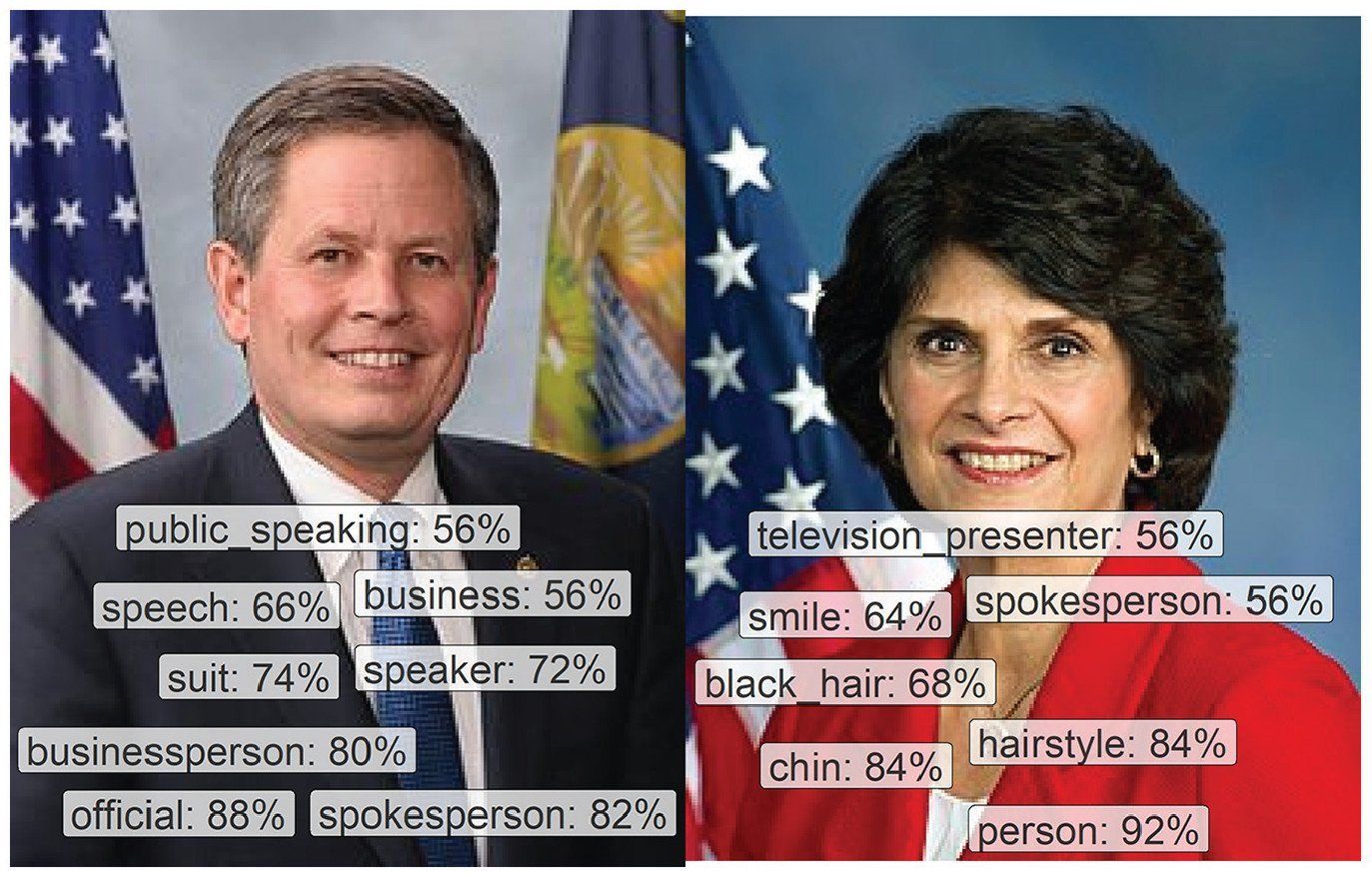

This time around, researchers found that AI image-recognition services analysing photos of US members of Congress made drastically different word associations depending on whether the subject was male or female, according to WIRED.

Men were more commonly associated with labels such as "businessperson" and "official", whereas women received a higher proportion of appearance-based descriptors such as "hairstyle" and "smile".

Now bloody AI is telling women to smile? Yikes…

Nor is this a one-off example of AI favouring one group of people over another. The study, titled Diagnosing Gender Bias in Image Recognition Systems, found consistent evidence of bias across Google Cloud Vision, Microsoft Azure Computer Vision and Amazon Rekognition.

This follows a recent Twitter experiment testing how the social media platform automatically crops the previews of images that don't fit its native 2:1 display ratio.

In this instance, Twitter user Tony Arcieri posted various images pairing Republican Senator Mitch McConnell with former President Barack Obama to see whose face the preview showed more often.

And who do you think the Twitter algorithm picked? If you guessed the crusty old white dude, you're absolutely correct.

There are so many factors at play with AI bias, including how algorithms are programmed, what definitions and parameters are included, and what data is used to 'teach' the AI.

As it currently stands, AI technologies clearly need more work before they represent women and people of colour fairly.

More studies like this one, pointing out discrepancies in how AI interprets data, are a good start, and will hopefully lead to women not being defined by their hairdo.
